Automating Eliza's Documentation

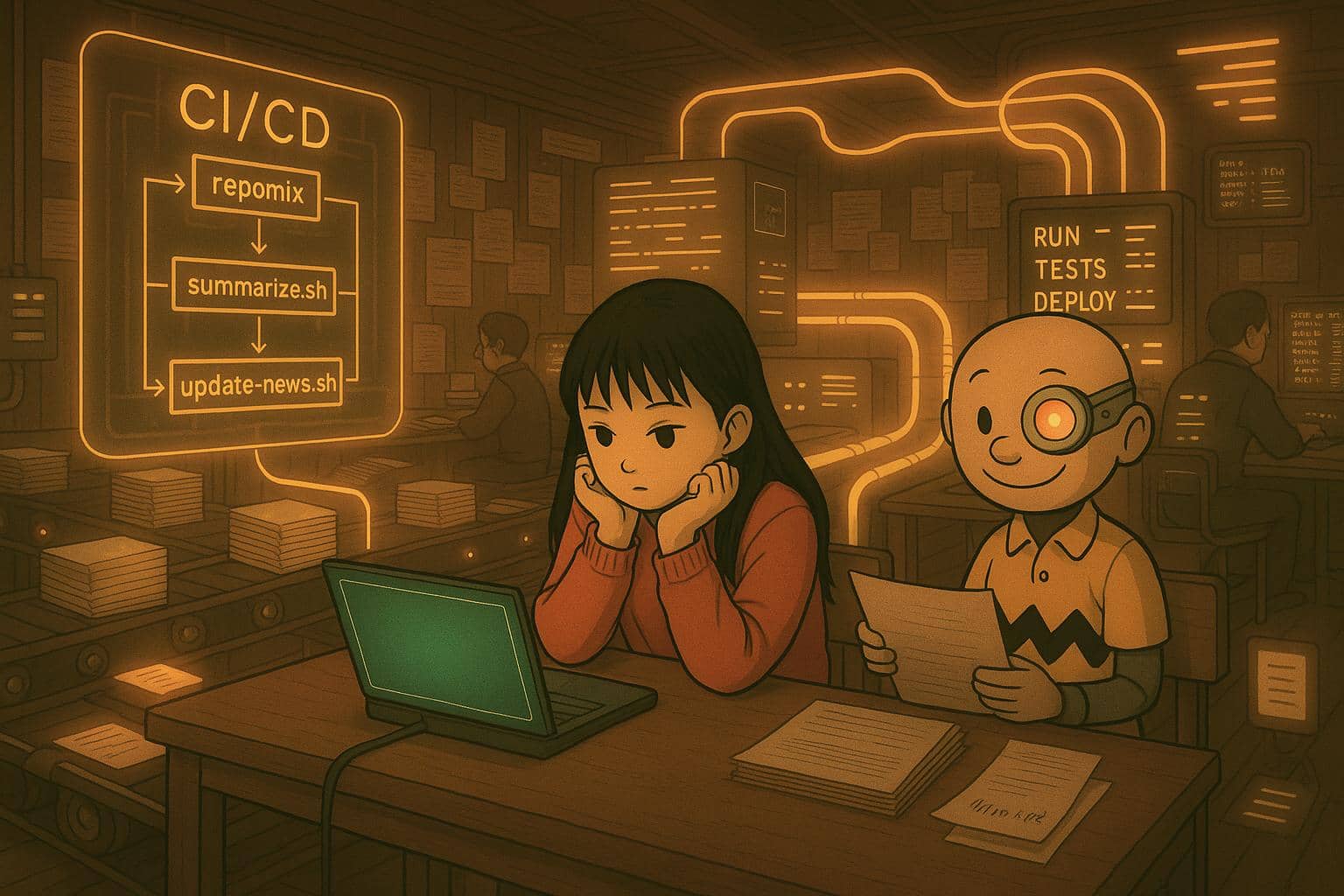

Our previous post, "Tools for Taming Information," highlighted the need for effective knowledge management in a dynamic project like Eliza. This follow-up explores the 'how': our CI/CD practices for automating documentation.

By treating docs as code, we boost consistency, accuracy, update speed, and reduce manual work for contributors, building a more robust and accessible knowledge base for everyone.

The Broader Information Ecosystem

Eliza thrive on information from diverse sources (GitHub, Discord, etc.). Efficiently capturing and processing this data is vital. The diagram below shows our information ecosystem, where data streams are collected, AI-enriched, and centralized in our knowledge repository. This post focuses on the "📚 docs/" portion and its automation.

The following diagram illustrates this broader flow, showing how various data streams are collected, processed, enriched (often with AI), and centralized into our knowledge repository. This repository then feeds various consumption layers, including AI agents, human users, and visual dashboards.

Details

While this diagram captures the full scope, this article will zoom in on the "📚 docs/" portion within the "KNOW (Central Repository)" and how our CI/CD pipelines automate its maintenance and enrichment.

Our documentation itself lives within the packages/docs directory and is built using Docusaurus, a static site generator optimized for creating clean, accessible documentation sites. Key configuration files like docusaurus.config.ts define the site's structure, plugins, themes, and navigation, including sidebars managed via sidebars.ts.

We manage several key types of content:

- Core Documentation: Conceptual explanations, guides, and tutorials for ElizaOS.

- API Reference: Automatically generated from our TypeScript code comments.

- REST API Docs: Derived from OpenAPI specifications.

- Packages: Documentation for individual adapters, clients, and plugins.

- Partners & Community: Information related to our ecosystem partners and community initiatives.

- Blog & News: Regular updates, articles, and aggregated news from the AI and ElizaOS space.

Static assets, such as images, diagrams, and important text files like llms.txt (a context file for AI models, found in packages/docs/static/), are also managed within this structure.

With this context in mind, let's delve into how automation helps us keep this diverse set of documentation current and accurate.

Automating Content Generation & Updates: The Mechanics

Maintaining accurate and timely documentation requires robust automation. Here's a concise look at the key mechanisms we employ:

News Aggregation & Syndication

Freshness in our news section is critical. The update-news.sh script, orchestrated by the update-news.yml GitHub workflow, automates this.

- Process: It fetches new Markdown articles daily from a predefined source (currently AI News for elizaOS).

- Integration: New news files are added to

packages/docs/news/and therepomix.config.jsonis updated. This ensures our AI context files (see below) also benefit from the latest news, enhancing the knowledge of our AI assistants.

AI Context Files: llms.txt & llms-full.txt

These files are crucial for providing our AI systems (like documentation Q&A bots or RAG agents) with relevant, up-to-date context about ElizaOS. We use Repomix, a powerful tool that packs your entire repository into a single, AI-friendly file, perfect for when you need to feed your codebase to Large Language Models (LLMs).

- Generation: The

llmstxt-generator.ymlworkflow usesrepomix, configured byrepomix.config.jsonandrepomix-full.config.json. - Content:

repomixscans specified project files and documentation, compiling them intopackages/docs/static/llms.txt(a general overview) andpackages/docs/static/llms-full.txt(a more comprehensive technical deep-dive). - Impact: This ensures our AI can answer questions based on the latest code and documentation without manual re-training on every minor change.

JSDoc & Typedoc

The jsdoc-automation.yml workflow leverages our autodoc package to help maintain JSDoc comments in our TypeScript codebase. These comments are then processed by the Docusaurus Typedoc Plugin (configured in docusaurus.config.ts) to generate the browsable API reference in /api. This plugin integrates TypeDoc into the Docusaurus lifecycle, presetting relevant typedoc-plugin-markdown options and generating a configurable Docusaurus sidebar.

- OpenAPI for REST: Our REST API documentation in

/docs/restis generated from an OpenAPI specification file (packages/docs/src/openapi/eliza-v1.yaml) usingdocusaurus-plugin-openapi-docs. This ensures the API docs are always in sync with the defined contract.

README Translations via AI

To broaden accessibility, the generate-readme-translations.yml workflow uses an AI model to translate our main project README.md into multiple languages. These translations are then committed to the repository.

Supporting Scripts

Several other scripts in packages/docs/scripts/ handle more specialized tasks:

update-partner-pages.js: This script automates the creation and maintenance of individual documentation pages for our partners, located underpackages/docs/partners/. It reads partner data (name, description, logo, website, social links, etc.) frompackages/docs/src/data/partners.tsx. For each partner, it generates a standardized Markdown page, ensuring consistency and ease of updates by modifying a central data source.summarize.sh: A versatile command-line utility that leverages AI models via the OpenRouter API to summarize various file types, including text, JSON, and even MP3 audio (which it first transcribes usinginsanely-fast-whisper). This script can be used to quickly generate summaries of content. For instance, when new videos are uploaded (e.g., to YouTube),summarize.shcan be used to transcribe and summarize the content, forming the basis for new documentation pages. It offers options for custom prompts, model selection, and output file specification.get-changelog.py: Helps in generating changelogs from repository history.

Dynamic Package Showcase (eliza.how/packages)

Our package showcase is a key resource for discovering ElizaOS adapters, clients, and plugins. Its content is dynamically generated through an automated process:

- Registry Fetching: The

update-registry.jsscript periodically fetches the list of available packages from the central ElizaOS Plugins Registry. - Custom Data Merging: This script enriches the registry data with custom descriptions and preview image paths defined in

plugin-descriptions.json. This file allows us to provide more curated information than what might be available directly from GitHub repository descriptions. - Data Generation: The processed data is then used to generate

registry-users.tsx, which exports a structured array of all packages. - Display Logic:

users.tsxconsumes this array, defines tags, and handles sorting for the showcase. - Rendering: Finally, the React components in

packages/docs/src/pages/showcase/(primarilyindex.tsxand its sub-components) render the interactive showcase. - AI-Assisted Summaries: The

plugin_summary_prompt.txtcan be used withsummarize.shto help generate or update descriptions withinplugin-descriptions.json, further streamlining content maintenance for the showcase.

This automated pipeline ensures the package showcase remains comprehensive and up-to-date with minimal manual intervention.

These automated processes form the core of our "docs-as-code" philosophy, reducing toil and improving the quality and timeliness of our documentation.

Automation Backbone: GitHub Actions

All this automation is powered by our CI/CD pipelines, defined using GitHub Actions. These workflows, located in our .github/workflows/ directory, are the engine that keeps our documentation building, testing, and deploying smoothly.

Key workflows ensure documentation integrity and timely updates:

- Core CI Checks (

ci.yaml,pr.yaml): On every push or pull request, these workflows run essential checks. For documentation, this includes linting Markdown files, checking for broken links, and ensuring the Docusaurus site builds successfully. This catches errors early, before they reach the live documentation. - Automated Content Generation Triggers: As detailed in the previous section, workflows like

update-news.yml,llmstxt-generator.yml,jsdoc-automation.yml, andgenerate-readme-translations.ymlare triggered on schedules or specific events (e.g., pushes to main branches) to automatically update relevant documentation sections or supporting files. - Deployment: The Docusaurus site is automatically deployed upon merges to the main branch using the command

USE_SSH=true bun run deploy. This ensures that approved changes are reflected on the live documentation site (eliza.how/docs) promptly.

This CI/CD setup not only automates repetitive tasks but also enforces quality standards, ensuring that our documentation remains a reliable resource.

Living Documentation: Challenges, Learnings & Future Vision

Treating documentation as code and automating its lifecycle is a journey, not a destination. While our current setup significantly improves efficiency and consistency, we continuously learn and identify areas for enhancement.

Current Challenges & Learnings

Our docs-as-code pipeline is robust, but we're always working to make it better. This means focusing on:

- Speed: Making sure docs build quickly

- Quality: Double-checking any content written by AI to ensure it's accurate and clear

- Teamwork for Tools: Keeping all the different software parts and scripts working together smoothly, especially as we add new things

Future Vision & Enhancements

Our goal is to make the documentation process even more seamless, intelligent, and contributor-friendly. We're exploring enhancements like:

- Smarter Triggers: Targeting CI jobs to run only when relevant files change.

- Smarter Link Checks: Moving beyond basic broken link detection to validating link relevance.

- Enhanced AI Assistance: Using AI to identify outdated docs or suggest improvements based on search queries.

- Automated Video Documentation: Using

summarize.shto transcribe, summarize, and draft PRs for new video content, plus automatingVideoGalleryupdates. - Automated Plugin Descriptions: Using CI and

summarize.shto propose updates toplugin-descriptions.jsonwhen new plugins or README changes are detected. - Faster Feedback Loops: Optimizing workflows for quicker contributor feedback on PRs.

- AI-Driven Content Strategy: Using site analytics to guide AI in suggesting or outlining new documentation.

We're always refining these processes. Your contributions and feedback via GitHub or Discord are invaluable in helping us build an even better documentation experience, we're listening!